Solr 4.0 (quietly?) introduced an interface called PostFilter which basically allows you to "post filter" the results that match the main query and all filters (i.e. "filter queries"). There are a few good articles about this:

However, I wanted to contribute to the article space on this topic to bring more attention to this awesome feature as it didn’t seem to be heavily advertised and I think it’s one of the more powerful features introduced in Solr 4.0. The best understood example of this in Solr 4 is to filter results based on a user specified distance criteria (i.e. find me results within X miles of me) and can also be used to implement ACL as per both Yonik and Erik’s articles. My use of PostFiltering is to collect score statistics to ultimately calculate search score distributions which I’ll describe later in the article.Background

If you’ve ever peeked under the covers to see how Solr and Lucene work, especially with respect to results generation and filtering, you’ll notice a few things:

-

Solr filter queries (fq) are all independently generated up front. Basically each fq will yield a BitSet (of size = number of total documents each entry with 0/1 to dictate that this document passed the filter)

-

This makes filter query caching important as having this cached can improve search performance so that these BitSets can be retrieved from memory as opposed to being constantly re-generated.

PostFilter overview

Simply put, a "post filter" is a filter query implementation that extends the PostFilter interface (who in turn extends the ExtendedQuery interface). To get started:

-

Write a class that extends the ExtendedQueryBase class implementing the PostFilter interface.

Set the "cost", with higher cost filters being executed last (cost must be > 100).

-

Optionally, when implementing the post filter, you can specify whether or not its results are cached although most of the time caching the results of a post filter doesn’t make sense..

While there is a nextDocument collector.collect(docId) //This in turn calls the scorer. EndThis is how the post filtering happens because the aforementioned game of "leap frog" between the filter and the main query happens in the "while there is a next document" phase. In the delegate collector, if some condition is not met, you can choose to *skip* the collection process by choosing not to invoke super.collect()! This is the where the magic of "post filtering" happens!

ResultSet Score Statistics

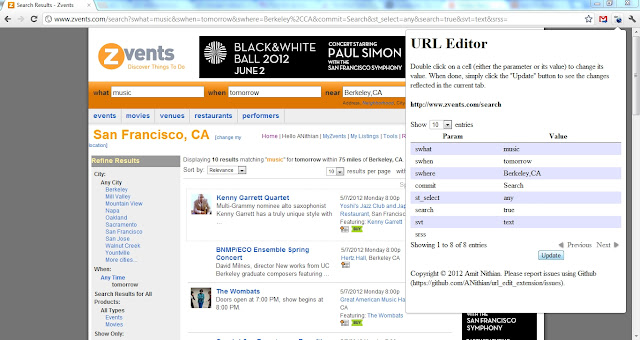

So you may be wondering why does all this matter for computing the score statistics. At Zvents.com (where I work), we have a federated search product where you can search for something (say "music") and the results are a blended mix of results from our events, venues, restaurants, performers, and movies categories. Each category is its own index (Solr core) and hence must be searched independently with the results blended together. The problem is that the scoring formulas for each category are vastly different hence the score ranges themselves can differ wildly (The #1 venue could have score of 30 while the #1 movie could have score of 10) so the question becomes how to normalize these scores to properly blend them.

Our solution to this was to use the Z score ( (score – avg) / stddev) which tells you how many standard deviations from the mean this score is (assuming a normal distribution which is fairly common). Now you can blend these scores together since now each score has a particular meaning. The challenge though is how to produce these simple statistics such as the mean and standard deviation so these scores can be properly blended.

Implementation

Solr PostFilter to the rescue! Recall that the delegate collector is invoked for each document that matches the query and filters and also recall that since this DelegatingCollector extends Collector you can also implement a custom scorer. So now if you implement a custom scorer that wraps the original scorer being set and capture the score on each collected document, you can now calculate the statistics necessary to produce a normalized score later. For us, "later" is in our federated search component that queries the multiple cores and blends the results together computing a normalized score based on these score statistics stored in the results object.

If you visit http://www.github.com/Zvents/score_stats_component, you’ll find a completely usable implementation of what was described earlier. There are unit tests so you can understand what it’s doing. Basically what happens is:

-

The ScoreStatsComponent will create an instance of the ScoreStatsPostFilter and add it to the list of already existing filters (or create a new list and add).

The ScoreStatsPostFilter will return an instance of DistributionCalcCollector in the getCollector() method and the DistributionCalcCollector will return a delegate scorer DistributionCalcScorer which will keep track of the necessary statistics from invoking the scorer being wrapped.

In the process() method of the ScoreStatsComponent, the calculated statistics are extracted by searching the filter list for the ScoreStatsPostFilter instance and returned in the results.

<lst name="scoreStats"> <float name="min">0.45898002</float> <float name="max">37.544556</float> <float name="avg">19.001766</float> <float name="stdDev">10.813288</float> <long name="numDocs">100</long> <float name="sumSquaredScores">47799.43</float> <float name="sumScores">1900.1766</float> </lst>

Notes/Gotchas/Outstanding issues

Since these statistics are generated during query execution, there are a few situations when these statistics won’t be generated even if explicitly asked:

-

When the query hits the queryResultCache and returns the results straight from the cache. To remedy this, setup a parallel "scoreStatsCache" of at least the same size/parameters. The component will check the statistics to see if they are valid (i.e. not NaN) and if the statistics are invalid, consult the "scoreStatsCache" using the same cache key generation as core Solr to fetch the statistics for the query.

a. To define this user cache, simply add this to your solrConfig.xml:

<cache name="scoreStatsCache" class="solr.LRUCache"

size="512"

initialSize="512"

autowarmCount="0"/>

When explicitly sorting the results that don’t include the "score" field. Scoring is an expensive process and if the user is requesting that the documents be explicitly sorted by a field not including the score, then the scorer doesn’t get called and hence the statistics won’t be computed.

-

There are scores generated but I am not sure how to get at them in a manner similar to what this component does already. If you have a solution, please submit a pull request!

Conclusion

Using Solr 4.0’s new PostFilter interface, you can implement custom scoring and collecting that previously could only be done by modifying the core Solr/Lucene source. In my opinion, this is one of the more powerful and yet underrated features of Solr 4 but it can open up more possibilities when implementing your search engine. This method of filtering is great when standard filters are expensive to compute. Often times, this can mean computationally expensive (in the case of distance filters) or if you have to consult some external data source for information (filter results to show only users who are "online").

I want to thank the Solr community for helping me come to this conclusion of using Solr’s PostFIlter interface. I posted a question about this question of computing score stats on the mailing list receiving an answer the next day about using the PostFilter interface. This was a huge help and I am hoping that this article helps others in the same way. As always, if there are questions/comments/inaccuracies or things that needs clarifying, please drop a comment and I’ll do my best to respond promptly!