It’s an amazing statistic: two-thirds of American workers are dissatisfied with their current job. Since most people spend one-third of their day working, that is an absurdly high share of life spent being unhappy. Golden handcuffs only make things worse because leaving can have serious financial consequences.

When our acquisition finally closed in early 2012, I thought that I’d be part of something special and enjoy the perks of being a member of a new team considered to be the innovative arm of a successful business organization. Unfortunately, within the first quarter, some key people and close colleagues of mine left. The most shocking announcement came a few months later, when the key person who led our acquisition resigned, leaving us without a leader and champion. The remainder of 2012 ended up being unproductive as my team and I dealt with increasing politics, fought production fires, and constantly trained a stream of new people on the complexities of our system.

Steve Jobs said in his inspiring 2005 Stanford commencement address, “If today were the last day of my life, would I want to do what I am about to do today? And whenever the answer has been ‘No’ for too many days in a row, I know I need to change something.” The last time I said “no” to this question was when I resigned in March 2013. As I reflected on this decision, I identified three key reasons why I gave up the remainder of my retention package.

Culture Fit

While this section could be an entire post given its importance, I’ll break down the differences between the pre- and post-acquisition culture into three main points:

- Openness and transparency

- Hiring philosophy

- Trust / Freedom from hierarchy

Openness and transparency

This is very important for a (small) startup, as everyone is working towards the same goal and has a vested interest in the outcome. When we were a startup, we had regular meetings where the CEO and leadership team gave updates on the status of various aspects of the business. At the end of each sprint, we would gather in our common area to demo the different features being shipped. While we had different mailing lists for product, engineering, and operations, generally anyone was welcome to be a member of any mailing list.

After the acquisition, and once the startup-minded leadership left, it was clear that the new management wanted to protect their various territories and close off the flow of information between teams. For example, the new Director of Engineering (a long-time alumnus of the acquiring company) removed product management from the engineering mailing lists and told our Director of Product (who had come over in the acquisition) to stay out of engineering’s affairs, which didn’t foster the happy partnership that is supposed to exist between the two groups. A short time later, he removed client services/account management, and for a while most of engineering, from the operations mailing list.

What ensued was added stress and chaos as communication that was once open among teams started to break down. Operations alerts normally visible to client services and engineering went unnoticed because our operations team was too busy fighting other fires. Customer-facing teams were clueless about decisions and changes made to the website by engineering. Regular team-wide status meetings stopped, and because we were a small team in a larger organization, it was never clear what the direction of our team was or why we were even there.

Hiring philosophy

As a startup, we had high standards, and we asked tough phone and in-person coding interview questions to ensure that we were only bringing in smart people capable of delivering big things to move the company forward. After our acquisition, however, it became clear that we had to hire indiscriminately to spend the “use it or lose it” quarterly budget. This significantly lowered the hiring standards (despite management’s claim to the contrary), and eventually those of us who stuck to our beliefs on conducting tough interviews were excluded from the interview panel.

Trust and freedom from (rigid) hierarchy

When I joined my startup as a new engineer, I was free to make decisions around how to implement assigned features and even to refactor significant portions of our codebase if necessary. My manager, also an individual contributor, was too busy to concern himself with the minutiae of what I was doing and trusted that I would ask for help when necessary. Since all code commits were automatically emailed to a mailing list (openness and transparency again), he would let me know if anything was off so I could address it. This made for a great working relationship, as I was able to quickly learn our complicated system and gain the confidence to make bigger technical decisions.

Unfortunately, after our acquisition, this empowerment quickly went away despite the fact that I had a significant amount of experience with our product, customers, and technology stack. My manager felt that because he was my superior, with the title of Director of Engineering/Technology, he could dictate technical direction despite my objections as a technical lead. While others considered me a technical lead, I was not given the freedom to actually make technical decisions about the product I led. The breaking point came when I pushed to use a Scala-based Hadoop library that made writing MapReduce jobs easier. I urged a new colleague to learn this library and use it to implement a simple task assigned to him. I saw that he enjoyed it, as it gave him an opportunity to learn something new and resolve a nagging JIRA task at the same time. A win-win all around.

Within a few weeks, my manager told me that we were not to use Scala and that we had to use Java. He considered this Scala library “too new,” with no books on the subject and a risk to our technology stack (despite its increasing popularity), and he argued that hiring developers who know Scala was hard. In the end, though, he assured me that Scala was being considered by the “god-like” architecture team for future projects. To me this translated to “Don’t take risks. Stick to Java because there are tons of people whom we can hire and we are unable to attract people who are smart and willing to work outside of their comfort zone in Scala.”

Not being challenged

The technology landscape changes too rapidly to be stuck doing the same things in the same technology stack or, worse (as can be the case with an acquisition), being valued only because you can maintain the current (and possibly dated) technology stack.

My biggest fear was being comfortable in an organization that did not promote innovation, working with colleagues who did not motivate and challenge me. There were no brown bag lunches on interesting research in computer science or cool open source projects that could be applied to the problems we, as a business unit, needed to solve. The introduction of new frameworks, databases, and technologies was seen as a “risk” because they were too new and we couldn’t hire people smart enough to work on them. I rarely saw any of my colleagues attend meetups on new technologies or present their work at any major technical conference. Furthermore, we didn’t employ any core “seeds” (e.g. Doug Cutting, Guido van Rossum, James Gosling) who could attract the kind of talent needed to really propel a company or group forward.

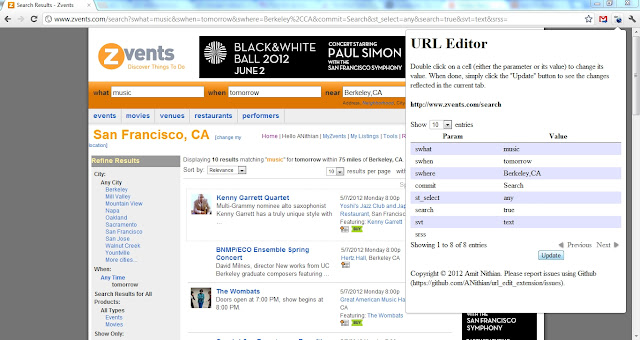

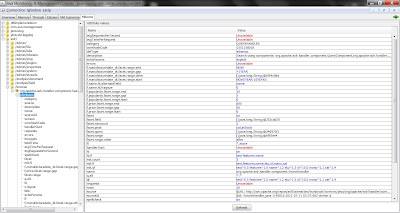

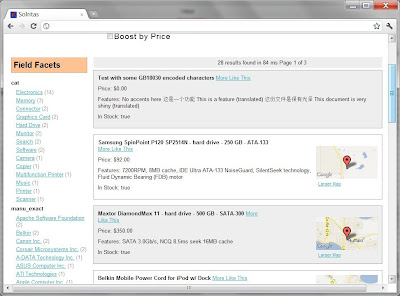

Startups (and those who work at them) are risk takers. They promote taking reasonable risks because the rewards can be huge and the pace of work can be challenging and gratifying. For example, in 2006, rather than using the traditional PHP to build the website, our founders used Rails 0.x, a huge risk at the time. Furthermore, the founders used (the newly released) Apache Solr to power the site’s search capabilities. Later, in 2007, our CTO said that in order to scale like Google, we needed to use or build the infrastructure that Google had, so he introduced Hadoop (relatively new at the time) and hired engineers to build our own NoSQL database. When I joined a few months later, this project was maturing, and it slowly became core to our analytics/reporting stack. We also hired consultants to train our engineering team on Hadoop, which was huge, as Big Data is now everywhere and centered around Hadoop (and its numerous related technologies).

In four years at a startup, I learned more than some people learn in over ten years at a big company. In short, I needed to be with people who challenged me, in a place where I could constantly learn and grow.

Opportunity cost

Opportunity cost refers to the value of the best alternative choice (or path) not taken. Generally every decision has an opportunity cost (sometimes well known, other times hypothetically derived), and you have to decide whether or not that cost is too high. I knew the tangible benefits of staying (salary, bonus, stock grants); however, the opportunity cost of staying was high since I was neither growing nor learning. Furthermore, my professional network wasn’t expanding, and the chances to work on things worth writing about and presenting were few and far between.

I also realized that the cost of simply being a senior/lead engineer (with a specific focus on search and big data) in a technically weak organization was quickly increasing. In 2009, when I first attended the SF Hadoop meetups, I felt that I was generally ahead of others (in terms of experience and working knowledge of the related technologies), as I was explaining things to those who were new to Hadoop. Over time, I saw everyone else catching up, and soon I was the one listening to others explain things to me like a newbie.

I knew that remaining in such an organization would cost me more than my package was worth in the long run, because of the continued decline in both my technical currency and the quality of my professional network, my two most valuable professional assets.

Conclusion

* Image courtesy of http://flavorscientist.com/2012/10/13/a-one-year-birthday-the-story-of-flavorscientist-com/